Hello soil spectroscopists!

Under the SoilSpec4GG initiative, we started an inter-laboratory spectroscopy ring trial this year to compare the variability of spectral response across different instruments. Variation among spectrometers can be a barrier to the routine use of soil spectroscopy with centralized spectral libraries: a secondary instrument whose characteristics contrast with those of the primary (central) instrument may yield unreliable predictions.

In this spectroscopy ring trial, the same set of samples (standards) has been shared across a number of laboratories. For this project, we are using 60 samples that originated from process control samples of the National Soil Survey Center (NSSC) Kellogg Soil Survey Laboratory (KSSL), and 10 samples from the North American Proficiency Testing (NAPT) program. They were all prepared at Woodwell Climate Research Center as fine earth (<2 mm) or finely milled (<180 mesh) material so that they could be scanned with VNIR and MIR instruments, respectively.

Standard samples can help monitor a laboratory’s standard operating procedure (SOP) and support quick intervention to keep new measurements from drifting away from previous records. More importantly, this set of standards can also be used to integrate local or regional datasets with existing soil spectral libraries that keep growing in size and in representation of soil types, like the USDA NRCS NCSS-KSSL MIR library. In fact, the samples from this research can form the basis for building calibration transfer models with the KSSL MIR soil spectral library.

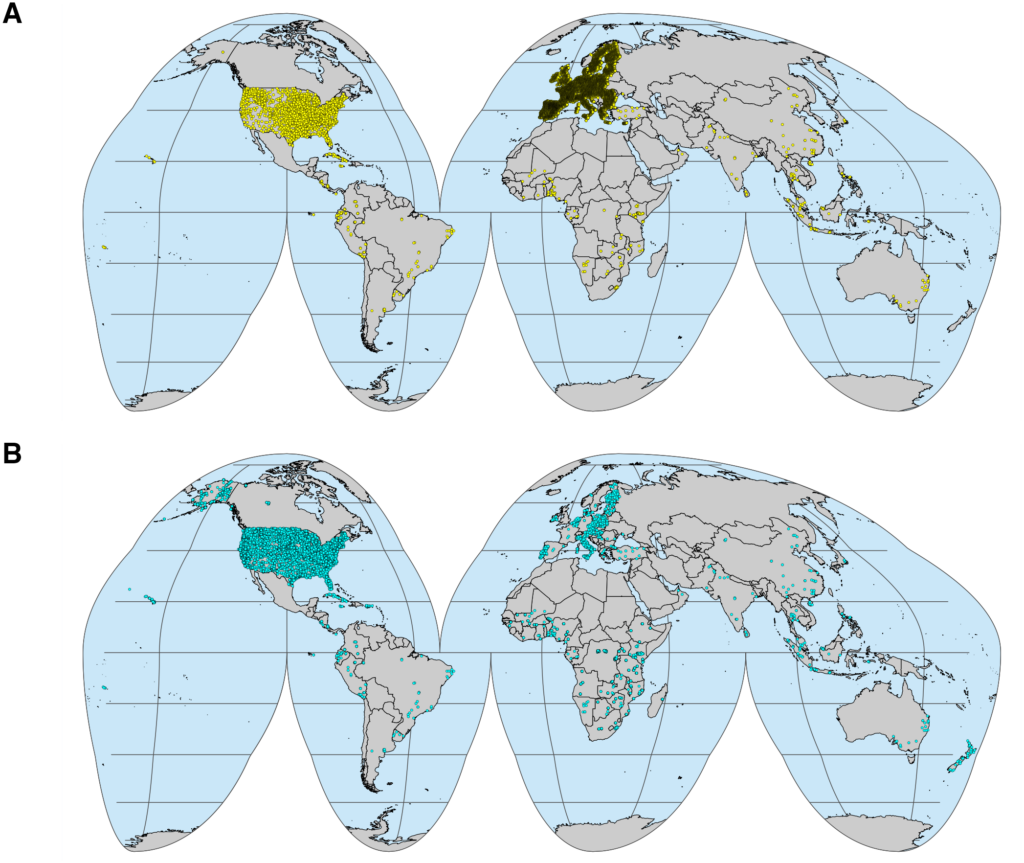

Spectral standardization is not a new topic in soil spectroscopy: recently published research has shown that it may help deliver more consistent results (e.g., Dangal et al., 2020; Pittaki-Chrysodonta et al., 2021). The difference now is that we have an exceptional opportunity to extend this idea to a broader range of conditions and instruments around the world (Figure 1).

The current list of Diffuse Reflectance Infrared Fourier Transform (DRIFT) MIR participants includes AgroCares (NLD), Argonne (USA), CSU-SoIL (USA), DAR (LSO), ETHZ-SAE (CHE), IAEA (AUT), KSSL (USA), LandCare (NZL), MSU (USA), OSU (USA), Rothamsted (GBR), Scion (NZL), UGhent (BEL), UIUC (USA), USP (BRA), UWisc (USA), and Woodwell (USA). Other laboratories have shared other types of spectra (Attenuated Total Reflection, or ATR, MIR) that we are also interested in assessing, but unfortunately they will not be fully tested with a calibration transfer model against the KSSL MIR library.

Figure 1. MIR instruments participating in the ring trial.

The VNIR assessment is being co-developed with the IEEE P4005 working group, which is responsible for standards and protocols for soil spectroscopy, because it includes some additional procedures such as the use of an internal soil standard. Meanwhile, we are advancing the analysis of the MIR spectral range internally, with contributions and feedback from all the participants of the MIR network. For this, we have proposed a systematic evaluation of preprocessing, spectral standardization methods, and model types to overcome the variability among instruments when building predictive models.

Preliminary exploratory data analysis (Figure 2) revealed marked differences, especially for laboratories whose instruments have contrasting characteristics compared to the KSSL instrument (instrument number 16). Spectral standardization helped align those spectra to the reference instrument (i.e., the KSSL Bruker Vertex 70).

Figure 2. Dissimilarity analysis of Standard Normal Variate spectra before and after the spectral standardization with Spectral Subspace Transform (SST) for different MIR instruments participating in the ring trial.
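For readers who want a feel for what a standardization step like this does, here is a minimal sketch of one common formulation of a spectral space transformation from the chemometrics literature. It is not the ring trial's actual implementation, which is not described in this post. The function name `fit_sst`, the number of components, and the synthetic "primary" and "secondary" spectra are all assumptions made for illustration.

```python
import numpy as np

def fit_sst(primary_std, secondary_std, n_components):
    """Return a matrix F so that secondary @ F approximates primary spectra.

    Both inputs hold the SAME standard samples (rows) measured on each
    instrument, on the same spectral grid (columns).
    """
    primary_std = np.asarray(primary_std, dtype=float)
    secondary_std = np.asarray(secondary_std, dtype=float)
    n_bands = primary_std.shape[1]
    # Stack the two instruments side by side and extract shared loadings.
    combined = np.hstack([primary_std, secondary_std])
    _, _, vt = np.linalg.svd(combined, full_matrices=False)
    loadings_t = vt[:n_components]             # shape: (k, 2 * n_bands)
    p_primary_t = loadings_t[:, :n_bands]      # loadings for the primary block
    p_secondary_t = loadings_t[:, n_bands:]    # loadings for the secondary block
    # x_transferred = x + x @ pinv(Ps^T) @ (Pp^T - Ps^T)
    correction = np.linalg.pinv(p_secondary_t) @ (p_primary_t - p_secondary_t)
    return np.eye(n_bands) + correction

# Synthetic, noise-free toy data (assumption): both instruments see the same
# three latent soil "components" but respond to them differently.
rng = np.random.default_rng(42)
response_primary = rng.normal(size=(3, 50))
response_secondary = rng.normal(size=(3, 50))
scores_std = rng.normal(size=(20, 3))          # 20 shared standard samples
F = fit_sst(scores_std @ response_primary, scores_std @ response_secondary, 3)

scores_new = rng.normal(size=(5, 3))           # 5 new samples, secondary only
new_primary = scores_new @ response_primary
new_secondary = scores_new @ response_secondary
error_before = np.abs(new_secondary - new_primary).max()
error_after = np.abs(new_secondary @ F - new_primary).max()
print(error_before, error_after)
```

In this idealized low-rank case the transferred spectra match the primary instrument up to rounding error. Real spectra contain noise and instrument effects that no small set of components captures exactly, so the choice of `n_components` and of the standard samples matters much more in practice.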

When tested within a predictive modeling framework, we found that all instruments can deliver good predictions if calibrated internally. When models developed on the KSSL MIR library were used as the calibration set, performance was unsatisfactory for instruments that do not share similar characteristics with the KSSL instrument. Overall, we found that local models (e.g., Memory Based Learning, MBL) deliver better predictions because they are less sensitive to those variations than global models (e.g., partial least squares regression, PLSR). For many but not all instruments, standard normal variate (SNV) preprocessing (normalization across each spectrum) reduced the variation enough to produce good predictions, but for the more dissimilar instruments, spectral standardization appears to be necessary to achieve good, unbiased predictions.
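To make the SNV step concrete, here is a minimal sketch using NumPy. The function name `snv` and the synthetic spectra are assumptions for illustration, and this is not the code used in the ring trial. Each spectrum is centered on its own mean and scaled by its own standard deviation, which removes a constant offset and a multiplicative gain between instruments.

```python
import numpy as np

def snv(spectra):
    """Standard normal variate: center and scale each spectrum (row) by itself."""
    spectra = np.asarray(spectra, dtype=float)
    row_mean = spectra.mean(axis=1, keepdims=True)
    row_std = spectra.std(axis=1, ddof=1, keepdims=True)
    return (spectra - row_mean) / row_std

# Synthetic example (assumption): a second instrument that reports the same
# spectra with a different gain and baseline offset.
rng = np.random.default_rng(0)
instrument_a = rng.random((5, 200))
instrument_b = 1.3 * instrument_a + 0.2

print(np.allclose(snv(instrument_a), snv(instrument_b)))  # True
```

A pure gain-and-offset difference disappears after SNV, which is consistent with SNV being sufficient for similar instruments. Differences that vary along the spectrum, such as resolution or wavenumber shifts, are not removed by this normalization, and that is where spectral standardization comes in.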

Figure 3. Predictive performance measured as Lin’s Concordance Correlation Coefficient (CCC) for organic carbon and clay for internal 10-fold cross validation (Int-PLSR), PLSR and MBL models using the KSSL library as the training set (CT acronym), with and without spectral standardization (afterSST acronym).

Overall, these findings are highly encouraging. First, every instrument is capable of building good models from spectra collected on that same instrument. Second, models built using the KSSL library could be applied directly to most spectra collected on similar instruments (in this case, instruments from the same manufacturer) using only SNV preprocessing to align the spectra. Third, when spectra are more dissimilar, calibration transfer greatly improved predictive performance for many but not all instruments.

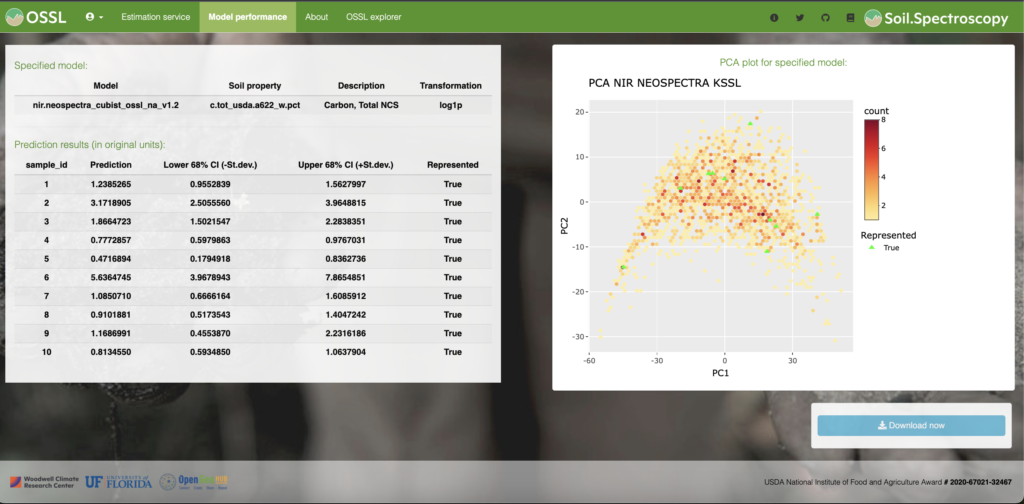

We plan to write a full report, a paper, and recommendations on which instruments are most compatible with the KSSL library. The findings from this exercise will be integrated into the OSSL engine to improve users’ confidence in predictive performance.